Problem definition

Automated mobility and other future applications require robust perception of the environment. Camera-based systems are one of the sensor types used for this purpose. A central, safety-critical challenge is the reliable detection of vulnerable road users (VRUs), such as pedestrians or cyclists, regardless of the prevailing visibility and lighting conditions. To make detection less susceptible to errors, complementary image information from the visible light spectrum and the near-infrared (NIR) spectrum can be fused. A conventional camera reaches its full detection potential in good visibility, whereas an infrared camera can significantly improve robustness in difficult visibility conditions, especially in darkness, fog, or rain.
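
One common way to combine the two spectra is early (pixel-level) fusion, where a visible-light image and an NIR image are stacked into a single multi-channel input for the detection network. The sketch below is a minimal illustration under stated assumptions, not the project's actual pipeline: it assumes both cameras are already calibrated and their images registered to the same resolution, that both are 8-bit, and the function name `early_fuse` is hypothetical.

```python
import numpy as np


def early_fuse(rgb: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """Stack a registered RGB image and an NIR image into one 4-channel array.

    Assumption: both inputs are 8-bit and already pixel-aligned (same H x W).
    Returns float32 values in [0, 1] with shape (H, W, 4): R, G, B, NIR.
    """
    if nir.ndim == 2:
        nir = nir[..., np.newaxis]  # (H, W) -> (H, W, 1)
    if rgb.ndim != 3 or rgb.shape[2] != 3 or nir.shape[2] != 1:
        raise ValueError("expected rgb of shape (H, W, 3) and nir of shape (H, W) or (H, W, 1)")
    if rgb.shape[:2] != nir.shape[:2]:
        raise ValueError("RGB and NIR images must have the same height and width")
    # Concatenate along the channel axis, then scale 8-bit values to [0, 1].
    return np.concatenate([rgb, nir], axis=-1).astype(np.float32) / 255.0
```

Early fusion is the simplest variant but depends on precise pixel alignment between the cameras; fusing intermediate feature maps or the final detections of two separate branches is more tolerant of small misalignments, at the cost of a larger network.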

Goal

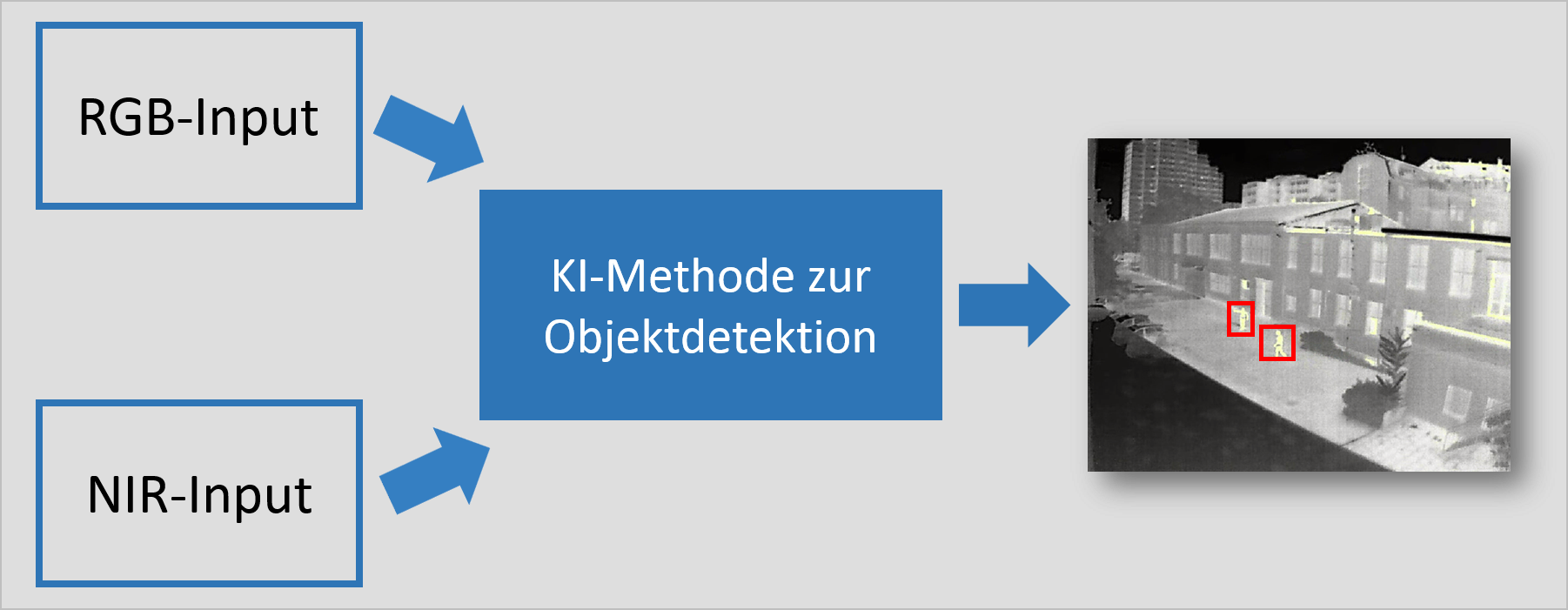

Development of a deep-learning-based AI method that fuses complementary sensor information from the visible and infrared spectra to detect VRUs.

Solution approach

First, a suitable deep learning architecture for static fusion of the image data will be selected. The AI method will then be pre-trained on publicly available datasets and subsequently adapted and optimized for the specific use case using a dataset recorded in the target application.
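
If the starting backbone was pre-trained on RGB images only, its first convolution expects three input channels, while a fused RGB+NIR input has four. A widely used workaround, shown here as an assumption rather than the project's chosen method, is to keep the pre-trained RGB kernels and initialize each additional input channel with their mean before fine-tuning on the target dataset. The function name `inflate_first_conv` and the weight layout (out_channels, in_channels, kH, kW) are hypothetical choices for this sketch.

```python
import numpy as np


def inflate_first_conv(weights: np.ndarray, extra_channels: int = 1) -> np.ndarray:
    """Extend pre-trained first-layer conv weights from RGB to RGB + extra channels.

    Assumption: `weights` has shape (out_channels, 3, kH, kW), taken from a
    backbone pre-trained on RGB images (hypothetical source). Each new input
    channel (e.g. NIR) starts as the mean of the three RGB kernels, so the
    network begins from the pre-trained features and is then fine-tuned.
    """
    if weights.ndim != 4 or weights.shape[1] != 3:
        raise ValueError("expected weights of shape (out_channels, 3, kH, kW)")
    mean_kernel = weights.mean(axis=1, keepdims=True)       # (out, 1, kH, kW)
    extra = np.repeat(mean_kernel, extra_channels, axis=1)  # (out, extra, kH, kW)
    return np.concatenate([weights, extra], axis=1)         # (out, 3 + extra, kH, kW)
```

The original RGB kernels are left unchanged, so for the visible-light channels the adapted layer initially behaves like the pre-trained one; fine-tuning on the recorded target dataset then learns how much weight to give the NIR channel.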

Test bed

The developed AI method can be tested in the Test Area Autonomous Driving Baden-Württemberg (TAF-BW) from a large-scale infrastructure perspective, or with the automated test vehicle CoCar from the FZI vehicle fleet.

Competence Center AI Engineering